Khalida Sarwari, Northeastern University; Northeastern researchers team up with Accenture to offer a road map for artificial intelligence ethics oversight

"Now, Northeastern professors John Basl and Ron Sandler

are offering organizations guidance for how to create a well-designed

and effective committee based on similar models used in biomedical

research.

Maintaining that an ethics committee

that is adequately resourced and thoughtfully designed can play an

important role in mitigating digital risks and maintaining trust between

an organization and the public, the researchers provide a framework for

such a system in a new report produced in collaboration with global professional services company Accenture...

“If you want to

build a committee that works effectively and if you really want to build

ethical capacity within an organization, it’s a significant undertaking

where you can’t just throw together a few people with ethical

expertise,” says Sandler.

Added Basl: “We lay out the kinds of

experts an organization will need—someone who knows local laws, someone

who knows ethics, a variety of technical experts, and members of an

affected community. Who those individuals are, or what their particular

expertise is, depends on the kind of technology being developed and

deployed.”"

Ethically-tangled aspects of 21st century societies and cultures. In the vein of Charles Darwin’s 1859 “entangled bank” metaphor—a complex and evolving digital ecosystem of difference and dependence, where humans, technologies, ethics, law, policy, data, and information converge and diverge. Kip Currier, PhD, JD

Sunday, September 15, 2019

Northeastern researchers team up with Accenture to offer a road map for artificial intelligence ethics oversight; Northeastern University, August 29, 2019

Building data and AI ethics committees; Accenture.com, August 20, 2019

Accenture.com; Building data and AI ethics committees

"In brief

"In brief

- Organizations face a difficult challenge when it comes to ethically-informed data collection, sharing and use.

- There is growing demand for incorporating ethical considerations into products and services involving big data, AI and machine learning.

- Outside of mere legal compliance, there is little guidance on how to incorporate this ethical consideration.

- To fill this gap, Northeastern University and Accenture explore the development of effective and well-functioning data and AI ethics committees."

Saturday, September 14, 2019

How to Build an AI Ethics Committee; The Wall Street Journal, August 30, 2019

Jared Council, The Wall Street Journal; How to Build an AI Ethics Committee

"A new guidebook aims to help organizations set up data and artificial-intelligence ethics committees and better deal with the ethical issues associated with the technology."

New road map provides guidelines for starting an ethics committee for artificial-intelligence and data concerns

"A new guidebook aims to help organizations set up data and artificial-intelligence ethics committees and better deal with the ethical issues associated with the technology."

CS department hires Ethics TAs; The Brown Daily Herald, September 5, 2019

Sarah Wang, The Brown Daily Herald;

CS department hires Ethics TAs

Newly-hired teaching assistants to integrate ethics into computer science

"Last spring, the Department of Computer Science announced the inaugural hiring of 10 Ethics Teaching Assistants, who will develop and deliver curricula around ethics and society in five of the department’s largest courses.

The department created the ETA program to acknowledge the impact that the products and services created by computer scientists have on society, said Professor of Computer Science and Department Chair Ugur Cetintemel. Cetintemel, who helped spearhead the program, said that it was important for concentrators to think critically about the usage and possible misuse of the solutions they build. “We want our concentrators to think about the ethical and societal implications of what they do, not as an afterthought but as another fundamental dimension they should consider as they develop their work,” he said... The CS department is already incorporating ethics into its curriculum through multiple courses such as CSCI 1951I: “CS for Social Change,” but the department hopes ETAs will encourage students to view the topic as a more fundamental aspect of CS."

Justin Weinberg, Daily Nous; Philosophers Win €17.9 Million Grant for Study of the Ethics of Disruptive Technologies

"A project on the ethics of socially disruptive technologies, led by Philip Brey, professor of philosophy of technology at the Department of Philosophy at the University of Twente and scientific director of the 4TU.Centre for Ethics and Technology, has received a €17.9 million (approximately $19.6 million) grant from the Dutch Ministry of Education, Culture and Science’s Gravitation program.

The other researchers involved in the 10-year project are Ibo van de Poel (TU Delft), Ingrid Robeyns (Utrecht University), Sabine Roeser (TU Delft), Peter-Paul Verbeek (University of Twente), and Wijnand IJsselsteijn (TU Eindhoven).

Socially disruptive technologies include artificial intelligence, robotics, nanomedicine, molecular biology, neurotechnology, and climate technology, among other things. A press release from the University of Twente describes what the researchers will be working on:

They will be developing new methods needed not only to better understand the development and implementation of the new generation of disruptive technologies, but also to evaluate them from a moral perspective and to intervene in the way technology continues to develop. This includes the development of an approach to ethical and philosophical aspects of a disruptive technology that is widely applicable. Another important aspect of the program is the cooperation between ethicists, philosophers and technical scientists aimed at finding better methods for responsible and sustainable innovation. One objective of the programme is to innovate ethics and philosophy in the broadest sense by researching how classical ethical values and philosophical concepts are being challenged by modern technology."

Orwellabama? Crimson Tide Track Locations to Keep Students at Games; The New York Times, September 12, 2019

Billy Witz, The New York Times; Orwellabama? Crimson Tide Track Locations to Keep Students at Games

Coach Nick Saban gets peeved at students leaving routs early. An app ties sticking around to playoff tickets, but also prompts concern from students and privacy watchdogs.

"Greg

Byrne, Alabama’s athletic director, said privacy concerns rarely came

up when the program was being discussed with other departments and

student groups. Students who download the Tide Loyalty Points app will

be tracked only inside the stadium, he said, and they can close the app —

or delete it — once they leave the stadium. “If anybody has a phone,

unless you’re in airplane mode or have it off, the cellular companies

know where you are,” he said.

Thursday, September 12, 2019

The misinformation age; Axios, September 12, 2019

Scott Rosenberg, David Nather, Axios; The misinformation age

"Hostile powers undermining

elections. Deepfake video and audio. Bots and trolls, phishing and fake

news — plus of course old-fashioned spin and lies.

Why it matters: The sheer volume of assaults on fact and truth is undermining trust not just in politics and government, but also in business, tech, science and health care as well.

- Beginning with this article, Axios is launching a series to help you navigate this new avalanche of misinformation, and illuminate its impact on America and the globe, through 2020 and beyond.

Our culture now broadly distrusts most claims to truth.

Majorities of Americans say they've lost trust in the federal government and each other — and think that lack of trust gets in the way of solving problems, according to a Pew Research Center survey."

'Ethics slam' packs pizzeria; The Herald Journal, September 10, 2019

Ashtyn Asay, The Herald Journal; 'Ethics slam' packs pizzeria

"The ethics slam was sponsored by the Weber State University Richard Richards Institute for Ethics, the USU Philosophy Club, and the Society for Women in Philosophy. It was organized by Robison-Greene and her husband, Richard Greene, a professor of philosophy and director of the Richard Richards Institute for Ethics.

This is the seventh ethics slam put together by Greene and Robison-Greene, whose collective goal is to encourage civil discourse and generate rich conversations within a respectful community.

This goal appeared to be met on Monday evening, as ethics slam participants engaged in polite conversation and debate for almost two hours. Opinions were challenged and controversial points were made, but Greene and Robison-Greene kept the conversation on track..."

"The ethics slam was sponsored by the Weber State University Richard Richards Institute for Ethics, the USU Philosophy Club, and the Society for Women in Philosophy. It was organized by Robison-Greene and her husband, Richard Greene, a professor of philosophy and director of the Richard Richards Institute for Ethics.

This is the seventh ethics slam put together by Greene and Robison-Greene, whose collective goal is to encourage civil discourse and generate rich conversations within a respectful community.

This goal appeared to be met on Monday evening, as ethics slam participants engaged in polite conversation and debate for almost two hours. Opinions were challenged and controversial points were made, but Greene and Robison-Greene kept the conversation on track...

"The next ethics slam will be at 7 p.m. Sept. 23 at the Pleasant Valley Branch of the Weber County Library. The topic of discussion will be: “Is censorship ever appropriate?”"

Māori anger as Air New Zealand seeks to trademark 'Kia Ora' logo; The Guardian, September 12, 2019

Eleanor Ainge Roy, The Guardian; Māori anger as Air New Zealand seeks to trademark 'Kia Ora' logo

"New Zealand’s national carrier, Air New Zealand, has offended the country’s Māori people by attempting to trademark an image of the words “kia ora”; the greeting for hello."

"New Zealand’s national carrier, Air New Zealand, has offended the country’s Māori people by attempting to trademark an image of the words “kia ora”; the greeting for hello."

Wednesday, September 11, 2019

How an Élite University Research Center Concealed Its Relationship with Jeffrey Epstein; The New Yorker, September 6, 2019

Ronan Farrow, The New Yorker;

How an Élite University Research Center Concealed Its Relationship with Jeffrey Epstein

New documents show that the M.I.T. Media Lab was aware of Epstein’s status as a convicted sex offender, and that Epstein directed contributions to the lab far exceeding the amounts M.I.T. has publicly admitted.

"Current and former faculty and staff of the media lab described a pattern of concealing Epstein’s involvement with the institution. Signe Swenson, a former development associate and alumni coordinator at the lab, told me that she resigned in 2016 in part because of her discomfort about the lab’s work with Epstein. She said that the lab’s leadership made it explicit, even in her earliest conversations with them, that Epstein’s donations had to be kept secret...

Swenson said that, even though she resigned over the lab’s relationship with Epstein, her participation in what she took to be a coverup of his contributions has weighed heavily on her since. Her feelings of guilt were revived when she learned of recent statements from Ito and M.I.T. leadership that she believed to be lies. “I was a participant in covering up for Epstein in 2014,” she told me. “Listening to what comments are coming out of the lab or M.I.T. about the relationship—I just see exactly the same thing happening again.”"

He Who Must Not Be Tolerated; The New York Times, September 8, 2019

Kara Swisher, The New York Times;

He Who Must Not Be Tolerated

Joi Ito’s fall from grace for his relationship with Jeffrey Epstein was much deserved. But his style of corner-cutting ethics is all too common in tech.

"Voldemort?

Of all the terrible details of the gross fraud that the former head of the M.I.T. Media Lab, Joichi Ito, and his minions perpetrated in trying to cover up donations by Jeffrey Epstein to the high-profile tech research lab, perhaps giving a pedophile a nickname of a character in a book aimed at children was the most awful.

“The effort to conceal the lab’s contact with Epstein was so widely known that some staff in the office of the lab’s director, Joi Ito, referred to Epstein as Voldemort or ‘he who must not be named,’ ” wrote Ronan Farrow in The New Yorker, in his eviscerating account of the moral and leadership failings of one of the digital industry’s top figures."

The Moral Rot of the MIT Media Lab; Slate, September 8, 2019

Justin Peters, Slate; The Moral Rot of the MIT Media Lab

"Over the course of the past century, MIT became one of the best brands in the world, a name that confers instant credibility and stature on all who are associated with it. Rather than protect the inherent specialness of this brand, the Media Lab soiled it again and again by selling its prestige to banks, drug companies, petroleum companies, carmakers, multinational retailers, at least one serial sexual predator, and others who hoped to camouflage their avarice with the sheen of innovation. There is a big difference between taking money from someone like Epstein and taking it from Nike or the Department of Defense, but the latter choices pave the way for the former."

"Over the course of the past century, MIT became one of the best brands in the world, a name that confers instant credibility and stature on all who are associated with it. Rather than protect the inherent specialness of this brand, the Media Lab soiled it again and again by selling its prestige to banks, drug companies, petroleum companies, carmakers, multinational retailers, at least one serial sexual predator, and others who hoped to camouflage their avarice with the sheen of innovation. There is a big difference between taking money from someone like Epstein and taking it from Nike or the Department of Defense, but the latter choices pave the way for the former."

Thursday, September 5, 2019

AI Ethics Guidelines Every CIO Should Read; Information Week, August 7, 2019

John McClurg, Information Week; AI Ethics Guidelines Every CIO Should Read

"Technology experts predict the rate

of adoption of artificial intelligence and machine learning will

skyrocket in the next two years. These advanced technologies will spark

unprecedented business gains, but along the way enterprise leaders will

be called to quickly grapple with a smorgasbord of new ethical dilemmas.

These include everything from AI algorithmic bias and data privacy

issues to public safety concerns from autonomous machines running on AI.

Because AI technology and use cases are changing so rapidly, chief

information officers and other executives are going to find it difficult

to keep ahead of these ethical concerns without a roadmap. To guide

both deep thinking and rapid decision-making about emerging AI

technologies, organizations should consider developing an internal AI

ethics framework."

Does the data industry need a code of ethics?; The Scotsman, August 29, 2019

David Lee, The Scotsman; Does the data industry need a code of ethics?

"Docherty says the whole area of data ethics is still emerging: “It’s where all the hype is now – it used to be big data that everyone talked about, now it’s data ethics. It’s fundamental, and embedding it across an organisation will give competitive advantage.”

So what is The Data Lab, set up in 2015, doing itself in this ethical space? “We’re ensuring data ethics training is baked in to the core technology training of all Masters students, so they are asking all the right questions,” says Docherty."

"Docherty says the whole area of data ethics is still emerging: “It’s where all the hype is now – it used to be big data that everyone talked about, now it’s data ethics. It’s fundamental, and embedding it across an organisation will give competitive advantage.”

So what is The Data Lab, set up in 2015, doing itself in this ethical space? “We’re ensuring data ethics training is baked in to the core technology training of all Masters students, so they are asking all the right questions,” says Docherty."

Teaching ethics in computer science the right way with Georgia Tech's Charles Isbell; TechCrunch, September 5, 2019

Greg Epstein, TechCrunch; Teaching ethics in computer science the right way with Georgia Tech's Charles Isbell

"The new fall semester is upon us, and at elite private colleges and universities, it’s hard to find a trendier major than Computer Science. It’s also becoming more common for such institutions to prioritize integrating ethics into their CS studies, so students don’t just learn about how to build software, but whether or not they should build it in the first place. Of course, this begs questions about how much the ethics lessons such prestigious schools are teaching are actually making a positive impression on students.

But at a time when demand for qualified computer scientists is skyrocketing around the world and far exceeds supply, another kind of question might be even more important: Can computer science be transformed from a field largely led by elites into a profession that empowers vastly more working people, and one that trains them in a way that promotes ethics and an awareness of their impact on the world around them?

Enter Charles Isbell of Georgia Tech, a humble and unassuming star of inclusive and ethical computer science. Isbell, a longtime CS professor at Georgia Tech, enters this fall as the new Dean and John P. Imlay Chair of Georgia Tech’s rapidly expanding College of Computing."

"The new fall semester is upon us, and at elite private colleges and universities, it’s hard to find a trendier major than Computer Science. It’s also becoming more common for such institutions to prioritize integrating ethics into their CS studies, so students don’t just learn about how to build software, but whether or not they should build it in the first place. Of course, this begs questions about how much the ethics lessons such prestigious schools are teaching are actually making a positive impression on students.

But at a time when demand for qualified computer scientists is skyrocketing around the world and far exceeds supply, another kind of question might be even more important: Can computer science be transformed from a field largely led by elites into a profession that empowers vastly more working people, and one that trains them in a way that promotes ethics and an awareness of their impact on the world around them?

Enter Charles Isbell of Georgia Tech, a humble and unassuming star of inclusive and ethical computer science. Isbell, a longtime CS professor at Georgia Tech, enters this fall as the new Dean and John P. Imlay Chair of Georgia Tech’s rapidly expanding College of Computing."

His Cat’s Death Left Him Heartbroken. So He Cloned It.; The New York Times, September 4, 2019

Sui-Lee Wee, The New York Times; His Cat’s Death Left Him Heartbroken. So He Cloned It.

"China’s genetics know-how is growing

rapidly. Ever since Chinese scientists cloned a female goat in 2000,

they have succeeded in producing the world’s first primate clones, editing the embryos of monkeys to insert genes associated with autism and mental illness, and creating superstrong dogs

by tinkering with their genes. Last year, the country stunned the world

after a Chinese scientist announced that he had created the world’s first genetically edited babies.

Pet cloning is largely unregulated and controversial where it is done, but in China the barriers are especially low. Many Chinese people do not think that using animals for medical research or cosmetics testing is cruel, or that pet cloning is potentially problematic. There are also no laws against animal cruelty."

Wednesday, September 4, 2019

What's The Difference Between Compliance And Ethics?; Forbes, May 9, 2019

Bruce Weinstein, Forbes; What's The Difference Between Compliance And Ethics?

"As important as both compliance and ethics are, ethics holds us to a higher standard, in my view. It's crucial to respect your institution's rules and policies, as well as the relevant laws and regulations, but your duties don't stop there.

High-character leaders ask, 'What is required of me?' but they don't leave it at that. Ethical leaders also ask, 'What is the right thing to do? How would an honorable person behave in this situation?'"

The Ethics of Hiding Your Data From the Machines; Wired, August 22, 2019

Molly Wood, Wired;

"In the case of the company I met with, the data collection they’re doing is all good. They want every participant in their longitudinal labor study to opt in, and to be fully informed about what’s going to happen with the data about this most precious and scary and personal time in their lives.

But when I ask what’s going to happen if their company is ever sold, they go a little quiet."

The Ethics of Hiding Your Data From the Machines

"In the case of the company I met with, the data collection they’re doing is all good. They want every participant in their longitudinal labor study to opt in, and to be fully informed about what’s going to happen with the data about this most precious and scary and personal time in their lives.

But when I ask what’s going to happen if their company is ever sold, they go a little quiet."

Regulators Fine Google $170 Million for Violating Children’s Privacy on YouTube; The New York Times, September 4, 2019

Natasha Singer and Kate Conger, The New York Times;

Regulators Fine Google $170 Million for Violating Children’s Privacy on YouTube

"Google on Wednesday agreed to pay a record $170 million fine and to make changes to protect children’s privacy on YouTube, as regulators said the video site had knowingly and illegally harvested personal information from youngsters and used that data to profit by targeting them with ads.

The measures were part of a settlement with the Federal Trade Commission and New York’s attorney general. They said YouTube had violated a federal children’s privacy law known as the Children’s Online Privacy Protection Act, or COPPA."

'Sense of urgency', as top tech players seek AI ethical rules; techxplore.com, September 2, 2019

techxplore.com; 'Sense of urgency', as top tech players seek AI ethical rules

"Some two dozen high-ranking representatives of the global and Swiss economies, as well as scientists and academics, met in Geneva for the first Swiss Global Digital Summit aimed at seeking agreement on ethical guidelines to steer technological development...

Microsoft president Brad Smith insisted on the importance that "technology be guided by values, and that those values be translated into principles and that those principles be pursued by concrete steps."

"We are the first generation of people who have the power to build machines with the capability to make decisions that have in the past only been made by people," he told reporters.

He stressed the need for "transparency" and "accountability ... to ensure that the people who create technology, including at companies like the one I work for remain accountable to the public at large."

"We need to start taking steps (towards ethical standards) with a sense of urgency," he said."

"Some two dozen high-ranking representatives of the global and Swiss economies, as well as scientists and academics, met in Geneva for the first Swiss Global Digital Summit aimed at seeking agreement on ethical guidelines to steer technological development...

Microsoft president Brad Smith insisted on the importance that "technology be guided by values, and that those values be translated into principles and that those principles be pursued by concrete steps."

"We are the first generation of people who have the power to build machines with the capability to make decisions that have in the past only been made by people," he told reporters.

He stressed the need for "transparency" and "accountability ... to ensure that the people who create technology, including at companies like the one I work for remain accountable to the public at large."

"We need to start taking steps (towards ethical standards) with a sense of urgency," he said."

MIT developed a course to teach tweens about the ethics of AI; Quartz, September 4, 2019

Jenny Anderson, Quartz; MIT developed a course to teach tweens about the ethics of AI

"This summer, Blakeley Payne, a graduate student at MIT, ran a week-long course on ethics in artificial intelligence for 10-14 year olds. In one exercise, she asked the group what they thought YouTube’s recommendation algorithm was used for.

“To get us to see more ads,” one student replied.

“These kids know way more than we give them credit for,” Payne said.

Payne created an open source, middle-school AI ethics curriculum to make kids aware of how AI systems mediate their everyday lives, from YouTube and Amazon’s Alexa to Google search and social media. By starting early, she hopes the kids will become more conscious of how AI is designed and how it can manipulate them. These lessons also help prepare them for the jobs of the future, and potentially become AI designers rather than just consumers."

Thursday, August 29, 2019

New Research Alliance Cements Split on AI Ethics; Inside Higher Ed, August 23, 2019

David Matthews, Inside Higher Ed;

New Research Alliance Cements Split on AI Ethics

"Germany, France and Japan have joined forces to fund research into “human-centered” artificial intelligence that aims to respect privacy and transparency, in the latest sign of a global split with the U.S. and China over the ethics of AI."

A Youth Camp Where No Issue Is Off Limits: Arts and crafts, water sports and roaring bonfires have been replaced by exercises in decision-making.; The New York Times, August 29, 2019

Audra D. S. Burch, The New York Times;

"Etgar 36 is a summer camp meets road trip, and campers are exposed to opposing arguments about hotly debated issues at a time when many Americans are not used to talking to people with whom they disagree. The arts and crafts, sports and roaring bonfires of traditional sleepaway summer camps have been replaced by cultural journeys and exercises in critical thinking and civic engagement.

For Billy Planer, the camp’s founder, arming young people with information and ideas is the best way to prepare them for the emerging challenges of the world. Perhaps more quickly than ever before, teenagers are pressured to take a side and have an opinion amid an unending sea of status updates on social media.

“Success for us is finding humanity in discussions with people who have opposing views,” Mr. Planer, 52, said. “We want our kids to ask questions” and “gut-check their own positions,” he said."

Arts and crafts, water sports and roaring bonfires have been replaced by exercises in decision-making.

"Etgar 36 is a summer camp meets road trip, and campers are exposed to opposing arguments about hotly debated issues at a time when many Americans are not used to talking to people with whom they disagree. The arts and crafts, sports and roaring bonfires of traditional sleepaway summer camps have been replaced by cultural journeys and exercises in critical thinking and civic engagement.

For Billy Planer, the camp’s founder, arming young people with information and ideas is the best way to prepare them for the emerging challenges of the world. Perhaps more quickly than ever before, teenagers are pressured to take a side and have an opinion amid an unending sea of status updates on social media.

“Success for us is finding humanity in discussions with people who have opposing views,” Mr. Planer, 52, said. “We want our kids to ask questions” and “gut-check their own positions,” he said."

Wednesday, August 28, 2019

What Sci-Fi Can Teach Computer Science About Ethics; Wired, August 28, 2019

Gregory Barber, Wired; What Sci-Fi Can Teach Computer Science About Ethics

Schools are adding ethics classes to their computer-science curricula. The reading assignments: science fiction.

"By the time class is up, Burton, a scholar of religion by training, hopes to have made progress toward something intangible: defining the emotional stakes of technology.

That’s crucial, Burton says, because most of her students are programmers. At the University of Illinois-Chicago, where Burton teaches, every student in the computer science major is required to take her course, whose syllabus is packed with science fiction. The idea is to let students take a step back from their 24-hour hackathons and start to think, through narrative and character, about the products they’ll someday build and sell. “Stories are a good way to slow people down,” Burton says. Perhaps they can even help produce a more ethical engineer."

Thursday, July 25, 2019

Solving The Tech Industry's Ethics Problem Could Start In The Classroom; NPR, May 31, 2019

Zeninjor Enwemeka, NPR; Solving The Tech Industry's Ethics Problem Could Start In The Classroom

"Ethics is something the world's largest tech companies are being forced to reckon with. Facebook has been criticized for failing to quickly remove toxic content, including the livestream of the New Zealand mosque shooting. YouTube had to disable comments on videos of minors after pedophiles flocked to its platform.

Some companies have hired ethicists to help them spot some of these issues. But philosophy professor Abby Everett Jaques of the Massachusetts Institute of Technology says that's not enough. It's crucial for future engineers and computer scientists to understand the pitfalls of tech, she says. So she created a class at MIT called Ethics of Technology."

"Ethics is something the world's largest tech companies are being forced to reckon with. Facebook has been criticized for failing to quickly remove toxic content, including the livestream of the New Zealand mosque shooting. YouTube had to disable comments on videos of minors after pedophiles flocked to its platform.

Some companies have hired ethicists to help them spot some of these issues. But philosophy professor Abby Everett Jaques of the Massachusetts Institute of Technology says that's not enough. It's crucial for future engineers and computer scientists to understand the pitfalls of tech, she says. So she created a class at MIT called Ethics of Technology."

What Students Gain From Learning Ethics in School; KQED, May 24, 2019

Linda Flanagan, KQED; What Students Gain From Learning Ethics in School

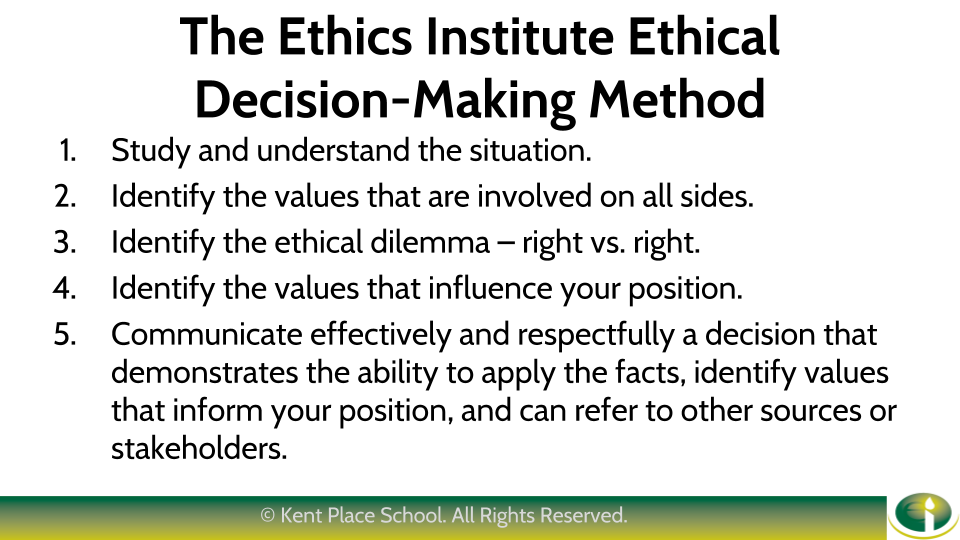

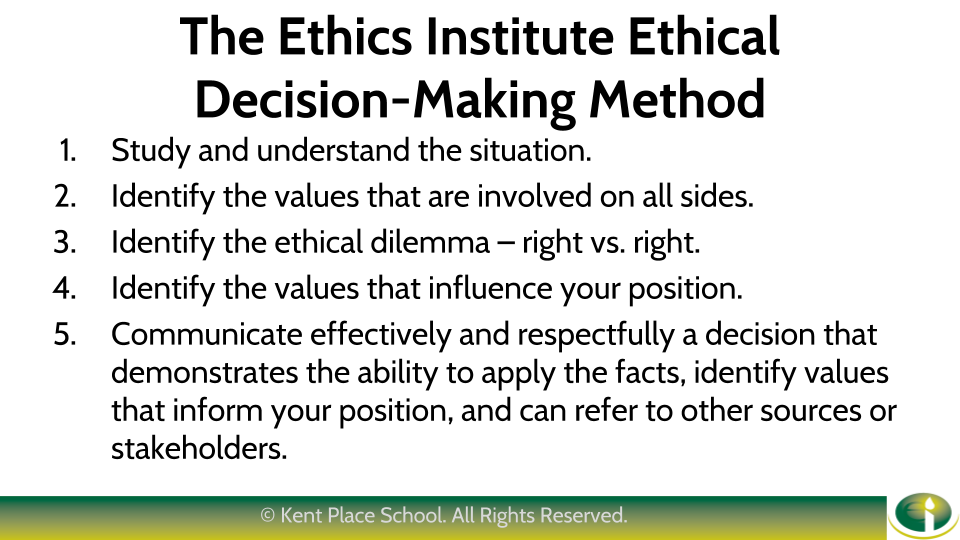

"Children at Kent Place are introduced to ethics in fifth grade, during what would otherwise be a health and wellness class. Rezach engages the students in simple case studies and invites them to consider the various points of view. She also acquaints them with the concept of right vs. right—the idea that ethical dilemmas often involve a contest between valid but conflicting values. “It’s really, really, really elementary,” she said.

In middle and upper school, the training is more structured and challenging. At the core of this education is a simple framework for ethical decision-making that Rezach underscores with all her classes, and which is captured on a poster board inside school. Paired with this framework is a collection of values that students are encouraged to study and explore. The values and framework for decision-making are the foundation of their ethics training."

Daniel Callahan, 88, Dies; Bioethics Pioneer Weighed ‘Human Finitude’; The New York Times, July 23, 2019

Katharine Q. Seelye, The New York Times; Daniel Callahan, 88, Dies; Bioethics Pioneer Weighed ‘Human Finitude’

“The scope of his interests was impressively wide, as the Hastings Center said in an appreciation of him, “beginning with Catholic thought and proceeding to the morality of abortion, the nature of the doctor-patient relationship, the promise and peril of new technologies, the scourge of high health care costs, the goals of medicine, the medical and social challenges of aging, dilemmas raised by decision-making near the end of life, and the meaning of death.”...

Among his most important books was “Setting Limits: Medical Goals in an Aging Society” (1987), which argued for rationing the health care dollars spent on older Americans.”…

“He urged his peers and the public to look beyond narrow issues in law and medicine to broader questions of what it means to live a worthwhile life,” Dr. Appel said by email.”…

While at Harvard, Mr. Callahan became disillusioned with philosophy, finding it irrelevant to the real world. At one point, he wandered over to the Harvard Divinity School to see if theology might suit him better. As he wrote in his memoir, “In Search of the Good: A Life in Bioethics” (2012), he concluded that theologians asked interesting questions but did not work with useful methodologies, and that philosophers had useful methodologies but asked uninteresting questions.”

Monday, May 6, 2019

A Facebook request: Write a code of tech ethics; Los Angeles Times, April 30, 2019

Mike Godwin, The Los Angeles Times; A Facebook request: Write a code of tech ethics

"The question isn’t just what rules should a reformed Facebook follow. The bigger question is what all the big tech companies’ relationships with users should look like. The framework needed can’t be created out of whole cloth just by new government regulation; it has to be grounded in professional ethics.

Doctors and lawyers, as they became increasingly professionalized in the 19th century, developed formal ethical codes that became the seeds of modern-day professional practice. Tech-company professionals should follow their example. An industry-wide code of ethics could guide companies through the big questions of privacy and harmful content.

Drawing on Yale law professor Jack Balkin’s concept of “information fiduciaries,” I have proposed that the tech companies develop an industry-wide code of ethics that they can unite behind in implementing their censorship and privacy policies — as well as any other information policies that may affect individuals."
