Zeninjor Enwemeka, NPR; Solving The Tech Industry's Ethics Problem Could Start In The Classroom

"Ethics is something the world's largest tech companies are being forced to reckon with. Facebook has been criticized for failing to quickly remove toxic content, including the livestream of the New Zealand mosque shooting. YouTube had to disable comments on videos of minors after pedophiles flocked to its platform.

Some companies have hired ethicists to help them spot some of these issues. But philosophy professor Abby Everett Jaques of the Massachusetts Institute of Technology says that's not enough. It's crucial for future engineers and computer scientists to understand the pitfalls of tech, she says. So she created a class at MIT called Ethics of Technology."

Ethically-tangled aspects of 21st century societies and cultures. In the vein of Charles Darwin’s 1859 “entangled bank” metaphor—a complex and evolving digital ecosystem of difference and dependence, where humans, technologies, ethics, law, policy, data, and information converge and diverge. Kip Currier, PhD, JD

Thursday, July 25, 2019

What Students Gain From Learning Ethics in School; KQED, May 24, 2019

Linda Flanagan, KQED; What Students Gain From Learning Ethics in School

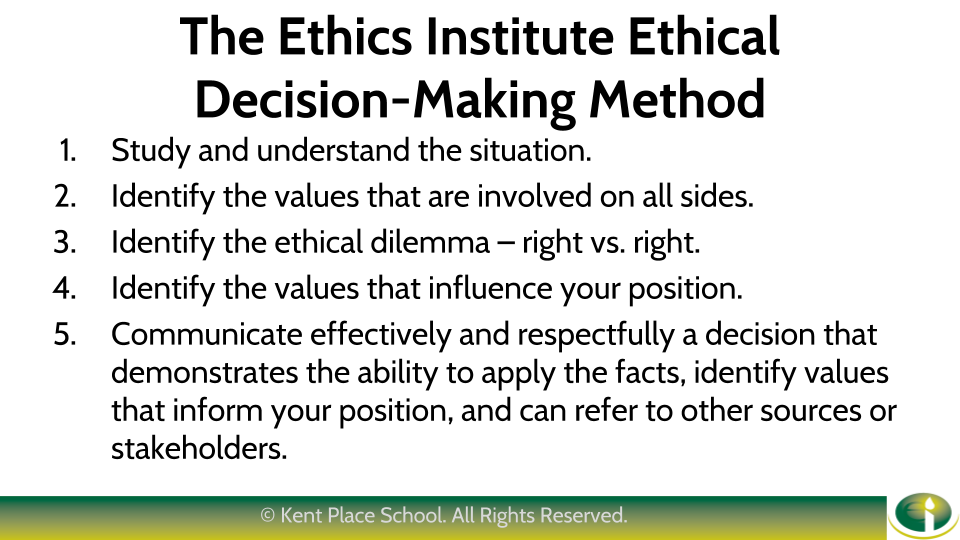

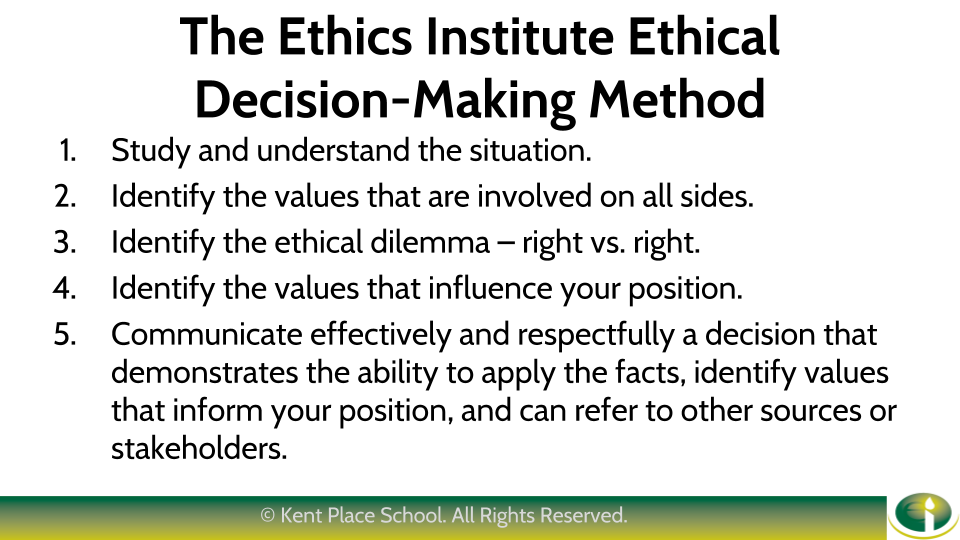

"Children at Kent Place are introduced to ethics in fifth grade, during what would otherwise be a health and wellness class. Rezach engages the students in simple case studies and invites them to consider the various points of view. She also acquaints them with the concept of right vs. right—the idea that ethical dilemmas often involve a contest between valid but conflicting values. “It’s really, really, really elementary,” she said.

In middle and upper school, the training is more structured and challenging. At the core of this education is a simple framework for ethical decision-making that Rezach underscores with all her classes, and which is captured on a poster board inside school. Paired with this framework is a collection of values that students are encouraged to study and explore. The values and framework for decision-making are the foundation of their ethics training."

Daniel Callahan, 88, Dies; Bioethics Pioneer Weighed ‘Human Finitude’; The New York Times, July 23, 2019

Katharine Q. Seelye, The New York Times; Daniel Callahan, 88, Dies; Bioethics Pioneer Weighed ‘Human Finitude’

“The scope of his interests was impressively wide, as the Hastings Center said in an appreciation of him, “beginning with Catholic thought and proceeding to the morality of abortion, the nature of the doctor-patient relationship, the promise and peril of new technologies, the scourge of high health care costs, the goals of medicine, the medical and social challenges of aging, dilemmas raised by decision-making near the end of life, and the meaning of death.”...

Among his most important books was “Setting Limits: Medical Goals in an Aging Society” (1987), which argued for rationing the health care dollars spent on older Americans.”…

“He urged his peers and the public to look beyond narrow issues in law and medicine to broader questions of what it means to live a worthwhile life,” Dr. Appel said by email.”…

While at Harvard, Mr. Callahan became disillusioned with philosophy, finding it irrelevant to the real world. At one point, he wandered over to the Harvard Divinity School to see if theology might suit him better. As he wrote in his memoir, “In Search of the Good: A Life in Bioethics” (2012), he concluded that theologians asked interesting questions but did not work with useful methodologies, and that philosophers had useful methodologies but asked uninteresting questions.”

Monday, May 6, 2019

A Facebook request: Write a code of tech ethics; Los Angeles Times, April 30, 2019

Mike Godwin, The Los Angeles Times; A Facebook request: Write a code of tech ethics

"The question isn’t just what rules should a reformed Facebook follow. The bigger question is what all the big tech companies’ relationships with users should look like. The framework needed can’t be created out of whole cloth just by new government regulation; it has to be grounded in professional ethics.

Doctors and lawyers, as they became increasingly professionalized in the 19th century, developed formal ethical codes that became the seeds of modern-day professional practice. Tech-company professionals should follow their example. An industry-wide code of ethics could guide companies through the big questions of privacy and harmful content.

Drawing on Yale law professor Jack Balkin’s concept of “information fiduciaries,” I have proposed that the tech companies develop an industry-wide code of ethics that they can unite behind in implementing their censorship and privacy policies — as well as any other information policies that may affect individuals."

Thursday, April 25, 2019

Made in China, Exported to the World: The Surveillance State; The New York Times, April 24, 2019

Paul Mozur, Jonah M. Kessel and Melissa Chan, The New York Times; Made in China, Exported to the World: The Surveillance State

"Under President Xi Jinping, the Chinese government has vastly expanded domestic surveillance, fueling a new generation of companies that make sophisticated technology at ever lower prices. A global infrastructure initiative is spreading that technology even further.

Ecuador shows how technology built for China’s political system is now being applied — and sometimes abused — by other governments. Today, 18 countries — including Zimbabwe, Uzbekistan, Pakistan, Kenya, the United Arab Emirates and Germany — are using Chinese-made intelligent monitoring systems, and 36 have received training in topics like “public opinion guidance,” which is typically a euphemism for censorship, according to an October report from Freedom House, a pro-democracy research group.

With China’s surveillance know-how and equipment now flowing to the world, critics warn that it could help underpin a future of tech-driven authoritarianism, potentially leading to a loss of privacy on an industrial scale. Often described as public security systems, the technologies have darker potential uses as tools of political repression."

The Legal and Ethical Implications of Using AI in Hiring; Harvard Business Review, April 25, 2019

The Legal and Ethical Implications of Using AI in Hiring

"Using AI, big data, social media, and machine learning, employers will have ever-greater access to candidates’ private lives, private attributes, and private challenges and states of mind. There are no easy answers to many of the new questions about privacy we have raised here, but we believe that they are all worthy of public discussion and debate."

Enron, Ethics And The Slow Death Of American Democracy; Forbes, April 24, 2019

Ken Silverstein, Forbes; Enron, Ethics And The Slow Death Of American Democracy

"“Ask not whether it is lawful but whether it is ethical and moral,” says Todd Haugh, professor of business ethics at Indiana University's Kelley School of Business, in a conversation with this reporter. “What is the right thing to do and how do we foster this? We are trying to create values and trust in the market and there are rules and obligations that extend beyond what is merely legal. In the end, organizational interests are about long-term collective success and not about short-term personal gain.”...

The Moral of the Mueller Report

The corollary to this familiar downfall is that of the U.S. presidency in context of the newly-released redacted version of the Mueller report. The same moral questions, in fact, have surfaced today that did so when Enron reigned: While Enron had a scripted code of conduct, it couldn’t transcend its own arrogance — that all was fair in the name of profits. Similarly, Trump has deluded himself and portions of the public that all is fair in the name of winning.

“One of the most disturbing things, is the idea you can do whatever you need to do so long as you don’t get punished by the legal system,” says Professor Haugh. “We have seen echoes of that ever since the 2016 election. It is how this president is said to have acted in his business and many of us consider this conduct morally wrong. It is difficult to have an ethical culture when the leader does not follow what most people consider to be moral actions.”...

Just as Enron caused the nation to evaluate the balance between people and profits, the U.S. president has forced American citizens to re-examine the boundaries between legality and morality. Good leadership isn’t about enriching the self but about bettering society and setting the tone for how organizations act. Debasing those standards is always a loser. And what’s past is prologue — a roadmap that the president is probably ignoring at democracy’s peril."

Tuesday, April 23, 2019

What the EU’s copyright overhaul means — and what might change for big tech; NiemanLab, Nieman Foundation at Harvard, April 22, 2019

Marcello Rossi, NiemanLab, Nieman Foundation at Harvard; What the EU’s copyright overhaul means — and what might change for big tech

"The activity indeed now moves to the member states. Each of the 28 countries in the EU now has two years to transpose it into its own national laws. Until we see how those laws shake out, especially in countries with struggles over press and internet freedom, both sides of the debate will likely have plenty of room to continue arguing their sides — that it marks a groundbreaking step toward a more balanced, fair internet, or that it will result in a set of legal ambiguities that threaten the freedom of the web."

Once upon a time in Silicon Valley: How Facebook's open-data nirvana fell apart; NBC News, April 19, 2019

David Ingram and Jason Abbruzzese, NBC News; Once upon a time in Silicon Valley: How Facebook's open-data nirvana fell apart

"Facebook’s missteps have raised awareness about the possible abuse of technology, and created momentum for digital privacy laws in Congress and in state legislatures.

“The surreptitious sharing with third parties because of some ‘gotcha’ in the terms of service is always going to upset people because it seems unfair,” said Michelle Richardson, director of the data and privacy project at the Center for Democracy & Technology.

After the past two years, she said, “you can just see the lightbulb going off over the public’s head.”"

OPINION: The Ethics in Journalism Act is designed to censor journalists; The Sentinel, Kennesaw State University, April 22, 2019

Sean Eikhoff, The Sentinel, Kennesaw State University; OPINION: The Ethics in Journalism Act is designed to censor journalists

"The Ethics in Journalism Act currently in the Georgia House of Representatives is a thinly veiled attempt to censor journalists. A government-created committee with the power to unilaterally suspend or probate journalists is a dangerous concept and was exactly the sort of institution the framers sought to avoid when establishing freedom of the press.

The bill, HB 734, is sponsored by six Republicans and would create a Journalism Ethics Board with nine members appointed by Steve Wrigley, the chancellor of the University of Georgia. This board would be tasked to create a process by which journalists “may be investigated and sanctioned for violating such canons of ethics for journalists to include, but not be limited to, loss or suspension of accreditation, probation, public reprimand and private reprimand.”

The bill is an attempt to violate journalists’ first amendment rights and leave the chance of government punishing journalists for reporting the truth."

Monday, April 22, 2019

Wary of Chinese Espionage, Houston Cancer Center Chose to Fire 3 Scientists; The New York Times, April 22, 2019

Mihir Zaveri, The New York Times; Wary of Chinese Espionage, Houston Cancer Center Chose to Fire 3 Scientists

"“A small but significant number of individuals are working with government sponsorship to exfiltrate intellectual property that has been created with the support of U.S. taxpayers, private donors and industry collaborators,” Dr. Peter Pisters, the center’s president, said in a statement on Sunday.

“At risk is America’s internationally acclaimed system of funding biomedical research, which is based on the principles of trust, integrity and merit.”

The N.I.H. had also flagged two other researchers at MD Anderson. One investigation is proceeding, the center said, and the evidence did not warrant firing the other researcher.

The news of the firings was first reported by The Houston Chronicle and Science magazine.

The investigations began after Francis S. Collins, the director of the National Institutes of Health, sent a letter in August to more than 10,000 institutions the agency funds, warning of “threats to the integrity of U.S. biomedical research.”"

Iancu v. Brunetti Oral Argument; C-SPAN, April 15, 2019

April 15, 2019, C-SPAN;

"Iancu v. Brunetti Oral Argument

The Supreme Court heard oral argument for Iancu v. Brunetti, a case concerning trademark law and the ban of “scandalous” and “immoral” trademarks. Erik Brunetti founded a streetwear brand called “FUCT” back in 1990. Since then, he’s attempted to trademark it but with no success. Under the Lanham Act, the U.S. Patent and Trademark Office (USPTO) can refuse an application if it considers it to be “immoral” or “scandalous” and that’s exactly what happened here. The USPTO Trademark Trial and Appeal Board also reviewed the application and they too agreed that the mark was “scandalous” and very similar to the word “fucked.” The board also cited that “FUCT” was used on products with sexual imagery and public interpretation of it was “an unmistakable aura of negative sexual connotations.” Mr. Brunetti’s legal team argued that this is in direct violation of his First Amendment rights to free speech and private expression. Furthermore, they said speech should be protected under the First Amendment even if one is in disagreement with it. This case eventually came before the U.S. Court of Appeals for the Federal Circuit. They ruled in favor of Mr. Brunetti. The federal government then filed an appeal with the Supreme Court. The justices will now decide whether the Lanham Act banning “immoral” or “scandalous” trademarks is unconstitutional."

Tech giants are seeking help on AI ethics. Where they seek it matters; Quartz, March 30, 2019

Dave Gershgorn, Quartz; Tech giants are seeking help on AI ethics. Where they seek it matters

"Meanwhile, as Quartz reported last week, Stanford’s new Institute for Human-Centered Artificial Intelligence excluded from its faculty any significant number of people of color, some of whom have played key roles in creating the field of AI ethics and algorithmic accountability.

Other tech companies are also seeking input on AI ethics, including Amazon, which this week announced a $10 million grant in partnership with the National Science Foundation. The funding will support research into fairness in AI."

A New Model For AI Ethics In R&D; Forbes, March 27, 2019

Cansu Canca, Forbes; A New Model For AI Ethics In R&D

"The ethical framework that evolved for biomedical research—namely, the ethics oversight and compliance model—was developed in reaction to the horrors arising from biomedical research during World War II and which continued all the way into the ’70s.

In response, bioethics principles and ethics review boards guided by these principles were established to prevent unethical research. In the process, these boards were given a heavy hand to regulate research without checks and balances to control them. Despite deep theoretical weaknesses in its framework and massive practical problems in its implementation, this became the default ethics governance model, perhaps due to the lack of competition.

The framework now emerging for AI ethics resembles this model closely. In fact, the latest set of AI principles—drafted by AI4People and forming the basis for the Draft Ethics Guidelines of the European Commission’s High-Level Expert Group on AI—evaluates 47 proposed principles and condenses them into just five.

Four of these are exactly the same as traditional bioethics principles: respect for autonomy, beneficence, non-maleficence, and justice, as defined in the Belmont Report of 1979. There is just one new principle added—explicability. But even that is not really a principle itself, but rather a means of realizing the other principles. In other words, the emerging default model for AI ethics is a direct transplant of bioethics principles and ethics boards to AI ethics. Unfortunately, it leaves much to be desired for effective and meaningful integration of ethics into the field of AI."

Thursday, April 18, 2019

'Disastrous' lack of diversity in AI industry perpetuates bias, study finds; The Guardian, April 16, 2019

Kari Paul, The Guardian; 'Disastrous' lack of diversity in AI industry perpetuates bias, study finds

"Lack of diversity in the artificial intelligence field has reached “a moment of reckoning”, according to new findings published by a New York University research center. A “diversity disaster” has contributed to flawed systems that perpetuate gender and racial biases found the survey, published by the AI Now Institute, of more than 150 studies and reports.

The AI field, which is overwhelmingly white and male, is at risk of replicating or perpetuating historical biases and power imbalances, the report said. Examples cited include image recognition services making offensive classifications of minorities, chatbots adopting hate speech, and Amazon technology failing to recognize users with darker skin colors. The biases of systems built by the AI industry can be largely attributed to the lack of diversity within the field itself, the report said...

The report released on Tuesday cautioned against addressing diversity in the tech industry by fixing the “pipeline” problem, or the makeup of who is hired, alone. Men currently make up 71% of the applicant pool for AI jobs in the US, according to the 2018 AI Index, an independent report on the industry released annually. The AI institute suggested additional measures, including publishing compensation levels for workers publicly, sharing harassment and discrimination transparency reports, and changing hiring practices to increase the number of underrepresented groups at all levels."

Privacy Is Too Big to Understand; The New York Times, April 16, 2019

Charlie Warzel, The New York Times; Privacy Is Too Big to Understand

At its heart, privacy is about how data is used to take away our control.

"Privacy Is Too Big to Understand

“Privacy” is an impoverished word — far too small a word to describe what we talk about when we talk about the mining, transmission, storing, buying, selling, use and misuse of our personal information."

Ethics Alone Can’t Fix Big Tech: Ethics can provide blueprints for good tech, but it can’t implement them; Slate, April 17, 2019

Daniel Susser, Slate; Ethics Alone Can’t Fix Big Tech

Ethics can provide blueprints for good tech, but it can’t implement them.

"Ethics requires more than rote compliance. And it’s important to remember that industry can reduce any strategy to theater. Simply focusing on law and policy won’t solve these problems, since they are equally (if not more) susceptible to watering down. Many are rightly excited about new proposals for state and federal privacy legislation, and for laws constraining facial recognition technology, but we’re already seeing industry lobbying to strip them of their most meaningful provisions. More importantly, law and policy evolve too slowly to keep up with the latest challenges technology throws at us, as is evident from the fact that most existing federal privacy legislation is older than the internet.

The way forward is to see these strategies as complementary, each offering distinctive and necessary tools for steering new and emerging technologies toward shared ends. The task is fitting them together.

By its very nature ethics is idealistic. The purpose of ethical reflection is to understand how we ought to live—which principles should drive us and which rules should constrain us. However, it is more or less indifferent to the vagaries of market forces and political winds. To oversimplify: Ethics can provide blueprints for good tech, but it can’t implement them. In contrast, law and policy are creatures of the here and now. They aim to shape the future, but they are subject to the brute realities—social, political, economic, historical—from which they emerge. What they lack in idealism, though, is made up for in effectiveness. Unlike ethics, law and policy are backed by the coercive force of the state."

Tuesday, April 16, 2019

Course organized by students tackles ethics in CS; The Brown Daily Herald, April 15, 2019

Janet Chang, The Brown Daily Herald; Course organized by students tackles ethics in CS

"Last spring, students in a new computer science social change course developed software tools for a disaster relief organization to teach refugee children about science and technology, a Chrome extension to filter hate speech on the internet and a mobile app to help doctors during patient visits.

Called CSCI 1951I: “CS for Social Change,” the course — now in its second iteration — was developed for computer science, design and engineering students to discuss and reflect on the social impact of their work while building practical software tools to help local and national partner nonprofits over the 15-week semester.

The course was initially conceived by Nikita Ramoji ’20, among others, who was a co-founder of CS for Social Change, a student organization that aims to add ethics education to college computer science departments. “The (general consensus) was that we were getting a really great computer science education, but we didn’t really have that social component,” she said."

Monday, April 15, 2019

EU approves tougher EU copyright rules in blow to Google, Facebook; Reuters, April 15, 2019

Foo Yun Chee, Reuters; EU approves tougher EU copyright rules in blow to Google, Facebook

"Under the new rules, Google and other online platforms will have to sign licensing agreements with musicians, performers, authors, news publishers and journalists to use their work.

The European Parliament gave a green light last month to a proposal that has pitted Europe’s creative industry against tech companies, internet activists and consumer groups."

Sunday, April 14, 2019

Europe's Quest For Ethics In Artificial Intelligence; Forbes, April 11, 2019

Andrea Renda, Forbes; Europe's Quest For Ethics In Artificial Intelligence

"This week a group of 52 experts appointed by the European Commission published extensive Ethics Guidelines for Artificial Intelligence (AI), which seek to promote the development of “Trustworthy AI” (full disclosure: I am one of the 52 experts).

This is an extremely ambitious document. For the first time, ethical principles will not simply be listed, but will be put to the test in a large-scale piloting exercise. The pilot is fully supported by the EC, which endorsed the Guidelines and called on the private sector to start using it, with the hope of making it a global standard.

Europe is not alone in the quest for ethics in AI. Over the past few years, countries like Canada and Japan have published AI strategies that contain ethical principles, and the OECD is adopting a recommendation in this domain. Private initiatives such as the Partnership on AI, which groups more than 80 corporations and civil society organizations, have developed ethical principles. AI developers agreed on the Asilomar Principles and the Institute of Electrical and Electronics Engineers (IEEE) worked hard on an ethics framework. Most high-tech giants already have their own principles, and civil society has worked on documents, including the Toronto Declaration focused on human rights. A study led by Oxford Professor Luciano Floridi found significant alignment between many of the existing declarations, despite varying terminologies. They also share a distinctive feature: they are not binding, and not meant to be enforced."

Studying Ethics Across Disciplines; Lehigh News, April 10, 2019

Madison Hoff, Lehigh News; Studying Ethics Across Disciplines

Undergraduates explore ethical issues in health, education, finance, computers and the environment at Lehigh’s third annual ethics symposium.

"The event was hosted for the first time by Lehigh’s new Center for Ethics and made possible by The Endowment Fund for the Teaching of Ethical Decision-Making. The philosophy honor society Phi Sigma Tau also helped organize the symposium, which allowed students to share their research work on ethical problems in or outside their field of study.

“Without opportunities for Lehigh undergrads to study ethical issues and to engage in informed thinking and discussion of them, they won’t be well-prepared to take on these challenges and respond to them well,” said Professor Robin Dillon, director of the Lehigh Center of Ethics. “The symposium is one of the opportunities the [Center of Ethics] provides.”

Awards were given to the best presentation from each of the three colleges and a grand prize. This year, the judges were so impressed with the quality of the presentations that they decided to award two grand prizes for the best presentation of the symposium category.

Harry W. Ossolinski ’20 and Patricia Sittikul ’19 both won the grand prize.

As a computer science student, Sittikul researched the ethics behind automated home devices and social media, such as Tumblr and Reddit. Sittikul looked at privacy and censorship issues and whether the outlets are beneficial.

Sittikul said the developers of the devices and apps should be held accountable for the ethical issues that arise. She said she has seen some companies look for solutions to ethical problems.

“I think it's incredibly important to look at ethical questions as a computer scientist because when you are working on technology, you are impacting so many people whether you know it or not,” Sittikul said."
